简单记录一下问题的排除过程,和对应的处理方式!

- 今天在

Twitter上面看到laixintao发了一个小心,说的是发现在Python程序下直接调用系统命令时,如果返回信息量过大的话,子进程输出信息量stdout会把管道(PIPE)的缓冲器打满,导致主进程卡住。

- 大概看了下,使用

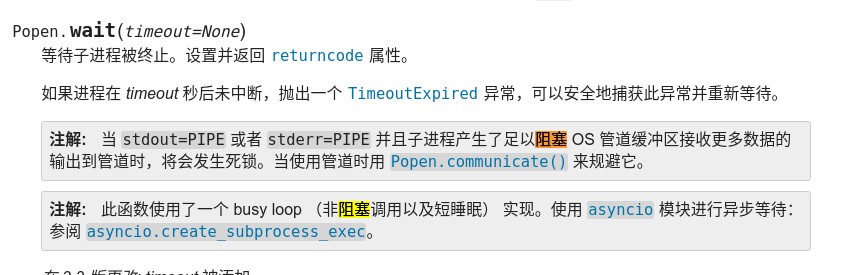

subprocess模块的Popen调用外部程序,如果stdout或stderr参数是pipe,并且程序输出超过操作系统的pipe size时,并且使用Popen.wait()方式等待程序结束获取返回值,会导致死锁,程序卡在wait()调用上。我们可以通过操作系统自带的命令,可以看到,linux的pipe默认值就是4M,所以超过这个值的话,就可能会出现子进程卡住的问题。像这样的问题,如果真的出现了,确实还是不太好定位原因的(不会有错误消息)。因为,你可以在网上搜了一圈都不知道该怎么解决,重点其实不是解决该问题的方法,而且不知道是这方面的原因。

# ubuntu

$ man 7 pip

PIPE_BUF

POSIX.1 says that write(2)s of less than PIPE_BUF bytes must be atomic: the output data is written

to the pipe as a contiguous sequence. Writes of more than PIPE_BUF bytes may be nonatomic: the kernel

may interleave the data with data written by other processes.

POSIX.1 requires PIPE_BUF to be at least 512 bytes. (On Linux, PIPE_BUF is 4096 bytes.) The precise

semantics depend on whether the file descriptor is nonblocking (O_NONBLOCK), whether there are multiple

writers to the pipe, and on n, the number of bytes to be writ‐ten:

O_NONBLOCK disabled, n <= PIPE_BUF

All n bytes are written atomically; write(2) may block if there is not room for n bytes to be written immediately

O_NONBLOCK enabled, n <= PIPE_BUF

If there is room to write n bytes to the pipe, then write(2) succeeds immediately, writing all n bytes;

otherwise write(2) fails, with errno set to EAGAIN.

O_NONBLOCK disabled, n > PIPE_BUF

The write is nonatomic: the data given to write(2) may be interleaved with write(2)s by other process;

the write(2) blocks until n bytes have been written.

O_NONBLOCK enabled, n > PIPE_BUF

If the pipe is full, then write(2) fails, with errno set to EAGAIN. Otherwise, from 1 to n bytes

may be written (i.e., a "partial write" may occur; the caller should check the return value from write(2)

to see how many bytes were actually written), and these bytes may be interleaved with writes by other processes.

- 我们知道在

Python 2.4添加了子进程模块,可以使用Popen来调用系统命令,得到对应命令的正常输出和异常输出。Popen()其实会执行fork()来创建一个子进程,在其中再执行对应命令。

from subprocess import Popen, PIPE

p = Popen(cmd, stdout=PIPE, stderr=PIPE)

(out,err) = p.wait()

- 但是,这里就有一个问题,其实在

Popen的文档里面已经有说明了:“注意数据读取是在内存中缓冲的,所以如果数据大小很大或者没有限制,不要使用这种方法”。显然,这个警告实际上意味着:“如果读取的数据有可能超过几页,那么这将使你的代码陷入死锁”。注意,这里是subprocess.wait才会卡住。

# Linux默认的pipe-size是64kb

from subprocess import Popen, PIPE

def dd_test(index, dd_size):

print(f'>>> [{index+1}] the dd sizs is {dd_size} to start...')

cmd = f'/bin/dd if=/dev/urandom bs=1024 count={dd_size} 2>/dev/null'

p = Popen(cmd, stdout=PIPE, stderr=PIPE, shell=True)

p.wait()

print(f'>>> [{index+1}] end the dd test.')

if __name__ == '__main__':

test_data_list = [63, 64, 65]

for index, dd_size in enumerate(test_data_list):

dd_test(index=index, dd_size=dd_size)

# 上述代码的输出信息

➜ python3 dd_test.py

>>> [1] the dd sizs is 63 to start...

>>> [1] end the dd test.

>>> [2] the dd sizs is 64 to start...

>>> [2] end the dd test.

>>> [3] the dd sizs is 65 to start...

- 其实,这个问题的解决方案非常简单,就是将

stdout和stderr不要设置为使用PIPE,而是使用适当的文件对象。这些对象将接受合理数量的数据,而不是4M。- 使用

communicate()方法 - 使用

StringIO库

- 使用

from subprocess import Popen, PIPE

p = Popen(cmd, stdout=PIPE, stderr=PIPE)

(out,err) = p.communicate()

from subprocess import Popen, PIPE

def run(self, cmd):

out = StringIO()

p = Popen(cmd, stderr=PIPE, stdout=PIPE, stdin=PIPE, shell=True)

p_return_code = p.returncode

while p_return_code is None:

data = p.stdout.read()

out.write(data)

p.poll()

if p_return_code != 0:

print(f'Run `{cmd}` with exit code {p_return_code} ...\n{p.stderr.read()}\n')

os.sys.exit(p_return_code)

return out.getvalue()